Building TIER cloud infrastructure as code

This post is Part 2 of the TIER cloud infrastructure series. Over the coming months, our current cloud infrastructure setup will be updated from version 2 to version 3. For a brief background on how it evolved, see Part 1, "TIER infrastructure landscape and centralized authentication on Okta", and stay tuned for v3.

Preface

Infrastructure as code – No exceptions!

No manual creation or change of AWS or Kubernetes resources. Never! Nowhere! This is a policy from Amazon's Day 1 culture that we follow at TIER.

👉 Apply this rule right now. It will save you later on. And while you are at it, make sure the rule is actually enforced. We will provide more information on this topic in the next post.

At TIER, several steps helped us on our journey there, including:

Global use of Terraform: Terraform as language of choice.

This comes naturally, as Terraform a) is the de facto standard for IaC today and b) has the broadest ecosystem support through community and vendor providers.
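Since the ecosystem argument rests on providers, it pays to pin them, which also removes the version mismatches mentioned below. A minimal sketch, assuming a root module that uses the four providers whose configuration files (aws.tf, okta.tf, kubernetes.tf, vault.tf) appear in the repository listing further down; the version constraints are illustrative, not the ones TIER uses.

```hcl
terraform {
  # Illustrative constraint: every run (local or CI) must use Terraform 1.x.
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
    okta = {
      source  = "okta/okta"
      version = "~> 4.0"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0"
    }
    vault = {
      source  = "hashicorp/vault"
      version = "~> 3.0"
    }
  }
}
```

Committing the generated `.terraform.lock.hcl` next to this block pins the exact provider builds as well.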

One infrastructure repository per team: each team manages one or more services, while each service is managed by exactly one team. We bundle all of a team's resources in one repository, for reasons such as team independence and promoting a DevOps culture within the teams.

Terraform in CI with final approval by the administrative team: this approach removes most pain points, among them permission issues, local changes that were never checked in, version mismatches and state lock conflicts. Its main advantage is that it answers questions like who changed what, and when.

The approval step is a bottleneck and may change. If you trust your developers, let them apply changes themselves. Hint: make sure such changes only add resources and never replace or destroy them.
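One way to back up that hint in code is Terraform's `lifecycle` meta-argument: with `prevent_destroy = true`, Terraform rejects any plan that would destroy the resource, and that includes a replacement (destroy, then create). The bucket below is a hypothetical example of a stateful resource worth protecting.

```hcl
# Hypothetical stateful resource that must never be destroyed or replaced.
resource "aws_s3_bucket" "rides_archive" {
  bucket = "example-service-foo-rides-archive"

  lifecycle {
    # Any plan that would destroy this bucket, including a forced
    # replacement, fails with an error instead of being applied.
    prevent_destroy = true
  }
}
```

This guard only works while the resource block exists: deleting the whole block from the configuration still produces a destroy, so a reviewer or a CI policy has to catch that case.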

Sandbox account with administrator permissions for everybody: the sandbox account is open to all, so that our developers can experiment and develop and test new modules (see below).

Cleaning up the sandbox is a different topic. Any pointers to usable tooling (ideally tooling that can keep selected resources alive based on tags) are very welcome!
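Whatever cleanup tool ends up being used, it needs reliable tags to decide what to keep. A minimal sketch, assuming the AWS provider's `default_tags` block (available in recent provider versions) labels everything created in the sandbox, while a hypothetical `keep-alive` tag marks the few resources a cleanup job should spare; tag names and the bucket are illustrative.

```hcl
provider "aws" {
  region = "eu-central-1"

  # Added to every taggable AWS resource created through this provider.
  default_tags {
    tags = {
      environment = "sandbox"
      team        = "ring-0"
    }
  }
}

resource "aws_s3_bucket" "experiment" {
  bucket = "ring-0-sandbox-experiment"

  # Resource-level tags are merged with default_tags.
  tags = {
    # Hypothetical marker a cleanup tool could honor to skip this resource.
    "keep-alive" = "true"
  }
}
```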

Terraform module registry: we maintain a collection of opinionated Terraform modules that enable encryption at rest and in transit, backups and similar safeguards by default. We wrote our own registry for them; more on it in a future post, but here is the code: TierMobility/boring-registry
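Consuming a module from a private registry looks the same as consuming one from the public registry; only the source address carries the registry's host name. A minimal sketch: the host `registry.example.com`, the module path `tier/s3-bucket/aws` and the `name` input are hypothetical.

```hcl
module "rides_bucket" {
  # Registry source format: <host>/<namespace>/<name>/<provider>.
  # All parts are hypothetical here.
  source  = "registry.example.com/tier/s3-bucket/aws"
  version = "~> 1.0"

  # Hypothetical input. Encryption and backups are assumed to be the
  # module's defaults, so the caller does not have to opt in.
  name = "example-service-foo-rides"
}
```

Because the safe settings live in the module, a module release with improved defaults reaches every team that bumps the version constraint.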

The three environments

We maintain three general environments: sandbox, staging and production.

Sandbox Environment

- everyone can have administrative access

- everyone can test and play, but should bear in mind that the account will be thoroughly cleaned every now and then

- the infrastructure in there may break without any notice

Staging Environment

- access is more restricted, but read access to everything is possible

- infrastructure must be created as code

- infrastructure mirrors production, though most likely not its capacity and sizing

Production Environment

- access is restricted

- infrastructure must be deployed as code via tooling
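Assuming each environment lives in its own AWS account (the sandbox is described as an account above), the provider configuration of each stage can refuse to touch any other account. A sketch of what a stage's `aws.tf` could contain; the account ID and role name are placeholders.

```hcl
# production/aws.tf (sketch; account ID and role name are placeholders)
provider "aws" {
  region = "eu-central-1"

  # Abort if the credentials resolve to any account other than production,
  # e.g. when staging credentials are used by mistake.
  allowed_account_ids = ["111111111111"]

  # Hypothetical role assumed by the CI runner that applies the code.
  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/terraform-ci"
  }
}
```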

Team infrastructure project

👉 If your teams are stable, this team-centered setup works well. If your teams or services change frequently, a service-centered setup is preferable. We will cover the migration in another blog post series.

We structure our code around teams and each team gets its own infrastructure repository (detailed example below).

The stages form the top level of the repository. Inside each stage we set up the team permissions and instantiate the services. Each service can potentially run in several regions, and the actual resources are created at the region level. At TIER we prefer explicit (and therefore duplicated) code over parameterized setups. From our perspective this is, well, exactly that: explicit. Promoting changes from sandbox to production takes more discipline, but the code shows exactly what is deployed, with no variables and no interpolations to resolve.
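To illustrate the explicit style, here is a hypothetical DynamoDB table as it might appear in the staging and in the production copy of the same service. The sizing is written out literally in each file instead of being passed in as a variable; the table name and capacities are made up.

```hcl
# staging/example-service-foo/eu-central-1/main.tf (hypothetical excerpt)
resource "aws_dynamodb_table" "rides" {
  name           = "example-service-foo-rides"
  billing_mode   = "PROVISIONED"
  read_capacity  = 5 # small: staging mirrors production, not its sizing
  write_capacity = 5
  hash_key       = "id"

  attribute {
    name = "id"
    type = "S"
  }
}

# production/example-service-foo/eu-central-1/main.tf (hypothetical excerpt)
resource "aws_dynamodb_table" "rides" {
  name           = "example-service-foo-rides"
  billing_mode   = "PROVISIONED"
  read_capacity  = 100 # production sizing, spelled out literally
  write_capacity = 50
  hash_key       = "id"

  attribute {
    name = "id"
    type = "S"
  }
}
```

Promoting a change means editing both files, but a reviewer sees the production capacity directly in the diff.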

This listing shows the Terraform module structure we currently use.

<team-infra-repository>

.
├── production/
│   ├── aws.tf
│   ├── okta.tf
│   ├── kubernetes.tf
│   ├── vault.tf
│   ├── main.tf
│   ├── example-service-foo/
│   │   ├── main.tf
│   │   ├── eu-central-1/
│   │   │   └── main.tf
│   │   └── eu-west-3/
│   │       └── main.tf
│   └── example-service-bar/
│       └── main.tf
│
├── staging/
│   ├── example-service-foo/
│   └── example-service-bar/
│
└── sandbox/

<production/main.tf>

module "example-service-foo" {
  source = "./example-service-foo"

  environment = "production"
  team        = "ring-0"
  service     = "example-service-foo"
  region      = "global"
}

<production/example-service-foo/main.tf>

module "example-service-foo-eu-central-1" {
  source = "./eu-central-1"

  service     = var.service
  region      = "eu-central-1"
  team        = var.team
  environment = var.environment
}
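For these module calls to validate, every module directory has to declare the inputs it receives: `./example-service-foo` gets `environment`, `team`, `service` and `region`, and so does `./eu-central-1`. A sketch of such a declaration file; the file name `variables.tf` and the validation rule are assumptions, not taken from the TIER repositories.

```hcl
# production/example-service-foo/variables.tf (hypothetical file name;
# the same declarations are needed in ./eu-central-1)

variable "environment" {
  type        = string
  description = "Stage the service runs in."

  # Hypothetical guard against typos in the stage name.
  validation {
    condition     = contains(["sandbox", "staging", "production"], var.environment)
    error_message = "The environment must be sandbox, staging or production."
  }
}

variable "team" {
  type        = string
  description = "Owning team, e.g. ring-0."
}

variable "service" {
  type        = string
  description = "Service name, e.g. example-service-foo."
}

variable "region" {
  type        = string
  description = "AWS region, or \"global\" at the service level."
}
```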

Terraform Continuous Integration

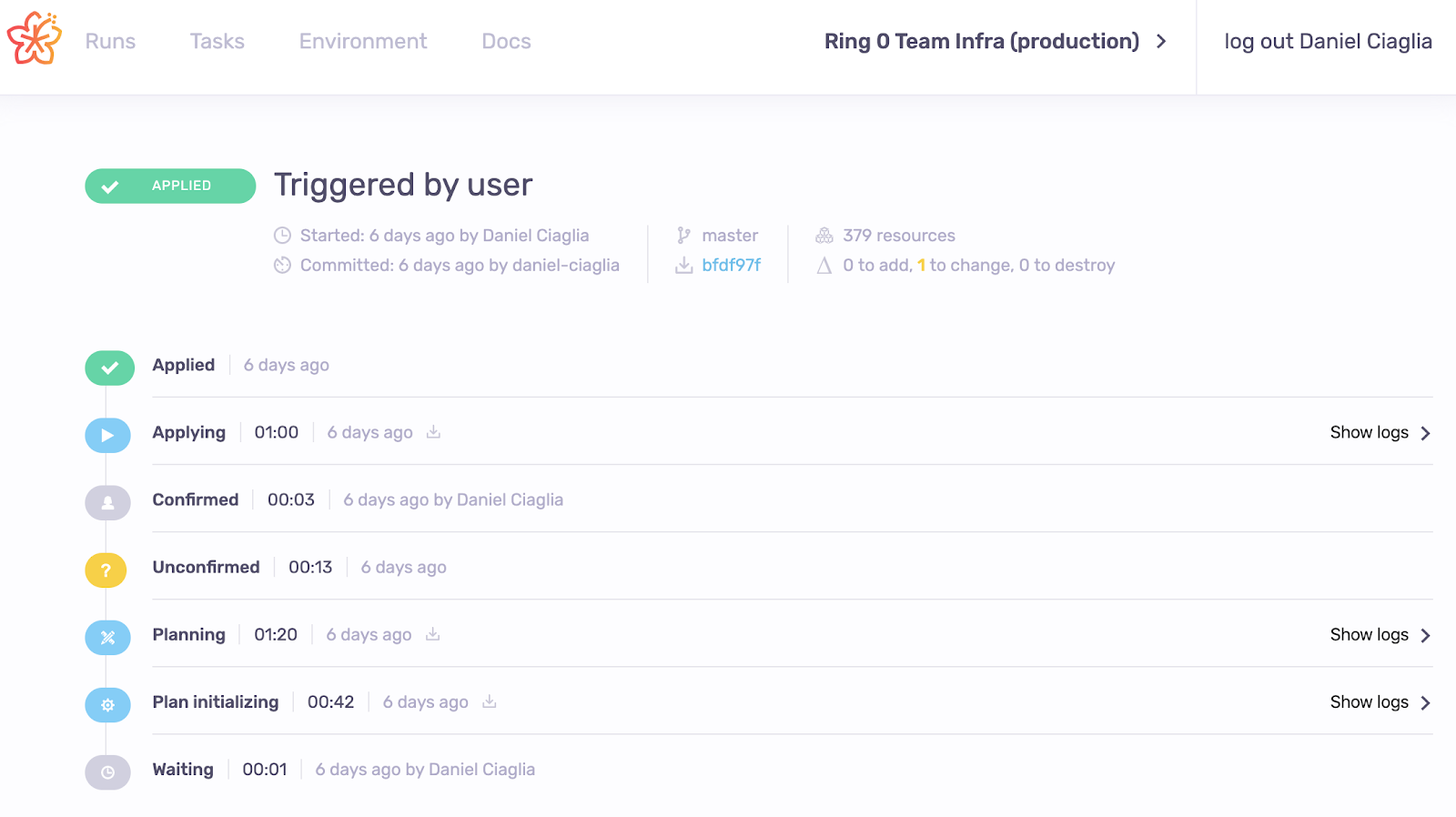

We run Terraform in CI: currently in Geopoiesis, and now also in its successor, spacelift.io.

👉 Avoid running Terraform locally; run it in CI instead. You gain audit trails, permission management and approvals, and you avoid version conflicts, timing issues, and code or changes that exist only on someone's machine.
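If the CI service does not manage Terraform state itself (Spacelift, for example, can), the state should at least live in a shared backend with locking, so that two runs can never modify it at the same time. A minimal sketch using the S3 backend with a DynamoDB lock table; the bucket, key and table names are placeholders.

```hcl
terraform {
  backend "s3" {
    # Placeholder names; the bucket and the lock table must exist beforehand.
    bucket         = "ring-0-terraform-state"
    key            = "production/terraform.tfstate"
    region         = "eu-central-1"
    dynamodb_table = "terraform-locks" # holds the state lock during runs
    encrypt        = true              # server-side encryption of the state
  }
}
```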

Read more

In another post we describe how data is exchanged between different stacks without exposing the whole Terraform state; we call this approach Terraform Configuration IntereXchange (TF-CIX). The post will be published soon, so stay tuned!
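The TF-CIX details belong to that post. For contrast, a common generic pattern (explicitly not TF-CIX) is to have the producing stack publish only selected values, here to AWS SSM Parameter Store, and the consuming stack read exactly those, instead of granting it the producer's whole state through the `terraform_remote_state` data source. The parameter path and the queue are hypothetical.

```hcl
# --- Producer stack (hypothetical) ---
resource "aws_sqs_queue" "rides" {
  name = "example-service-foo-rides"
}

# Publish a single value instead of exposing the whole state.
resource "aws_ssm_parameter" "rides_queue_url" {
  name  = "/ring-0/production/example-service-foo/rides-queue-url"
  type  = "String"
  value = aws_sqs_queue.rides.url
}

# --- Consumer stack (hypothetical, separate configuration) ---
data "aws_ssm_parameter" "rides_queue_url" {
  name = "/ring-0/production/example-service-foo/rides-queue-url"
}

output "rides_queue_url" {
  value = data.aws_ssm_parameter.rides_queue_url.value
  # The AWS provider marks parameter values as sensitive.
  sensitive = true
}
```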

The series

- Building TIER cloud infrastructure as code - Part 1 // Landscape and Centralized Authentication

- Building TIER cloud infrastructure as code - Part 2 // Environments and Team based code

- Building TIER cloud infrastructure as code - Part 3 // TF-CIX as an approach to share information between terraform stacks