What is Access Management and why do we need it?

In this post, we will discuss how we manage access control for humans inside Kubernetes at TIER, using their AWS roles.

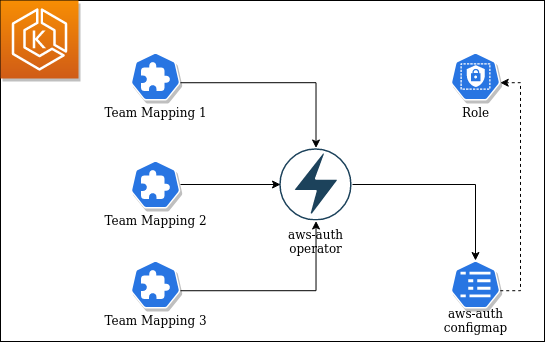

At TIER, we use the aws-auth-operator to manage user access to Kubernetes. It lets us keep the configuration in code and gives us a clear audit log. There are other ways to manage access, but they did not work for us for a number of reasons.

We will also explain why we wrote the aws-auth-operator and why you might want to take a closer look at it.

What is the problem?

When using a central Identity Provider and Federated Users in an AWS landscape, those users must be mapped into Kubernetes access control. AWS EKS does this mapping via a centralized ConfigMap called aws-auth, which resides in the kube-system namespace. The aws-auth ConfigMap maps AWS roles and users to Kubernetes groups, thus achieving access control from AWS Land to Kubernetes Land. One question remains.

How do we manage a central resource when the infrastructure code is spread across many individual team repositories? (See this post for details of our team-based infrastructure repositories.)

Moreover, the central ConfigMap is not as friendly and flexible as it could be. This is an example ConfigMap from AWS' documentation:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: aws-auth
      namespace: kube-system
    data:
      mapRoles: |
        - rolearn: arn:aws:iam::111122223333:role/example-operator
          username: example-operator
          groups:
            - example-viewers
            - example-editors
      mapUsers: |
        - userarn: arn:aws:iam::111122223333:user/admin
          username: admin
          groups:
            - system:masters
        - userarn: arn:aws:iam::111122223333:user/ops-user
          username: ops-user
          groups:
            - system:masters

Since we use Terraform to set up our clusters, the ConfigMap is configured by the Terraform EKS module. This is fine initially, but we want to add more IAM roles over time and avoid manual editing of the ConfigMap.

Speaking of manual work, there were times when TIER rangers (people who take care of the fleet in the streets) used to collect 100% of the fleet every single night. Time flies!

There is also no config validation and no audit log, which makes changes hard to monitor.
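
To illustrate what validating a single mapping entry could look like, here is a minimal Python sketch. The function name `validate_mapping`, the checks, and the ARN pattern are assumptions made for this example; they are not taken from the aws-auth-operator, and real IAM ARNs allow more variation than this pattern accepts.

```python
import re

# Assumed shape of an IAM role ARN: a 12-digit account id, then "role/<name>".
# Illustrative only; real ARNs also cover other partitions and formats.
ROLE_ARN_PATTERN = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")


def validate_mapping(entry: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []

    rolearn = entry.get("rolearn", "")
    if not ROLE_ARN_PATTERN.match(rolearn):
        problems.append(f"invalid rolearn: {rolearn!r}")

    if not entry.get("username"):
        problems.append("username is missing or empty")

    groups = entry.get("groups")
    if not isinstance(groups, list) or not groups:
        problems.append("groups must be a non-empty list")
    elif not all(isinstance(g, str) and g for g in groups):
        problems.append("every group must be a non-empty string")

    return problems
```

A check like this could run before a change ever reaches the cluster, so that a typo in a role ARN is rejected instead of silently locking a team out.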

The other issue is access and permissions. Access rights are only visible from within Kubernetes, and group rights can only be added by admins.

All these issues complicate the situation and require improvements.

Meet the aws-auth-operator

The solution to the problems above is the aws-auth-operator, the central management entity for the aws-auth ConfigMap. It follows the Kubernetes operator pattern, which adds automation beyond what Kubernetes provides out of the box: custom resources describe the desired configuration, and changes to them trigger the corresponding actions.

The aws-auth-operator is based on the Kopf framework and has a decentralized configuration.

How does it work? The aws-auth-operator re-constructs the central aws-auth configuration (configuring access from AWS world to Kubernetes land) based on individual fragments, allowing a flexible setup for the ever-changing teams.

There are several advantages of this approach:

- configuration is set up in code

- log of all changes to stdout

- changelog is available

- more integrations through Kubernetes events possible

Here is what a configuration file looks like for the search-and-ride team:

    apiVersion: tier.app/v1
    kind: AwsAuthMapping
    metadata:
      name: search-and-ride
    spec:
      mappings:
        - groups:
            - search-and-ride-viewers
            - search-and-ride-editors
          rolearn: arn:aws:iam::0123456789:role/team-search-and-ride-operator
          username: team-search-and-ride-operator
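
To make the idea of rebuilding the central configuration from individual fragments more concrete, here is a minimal Python sketch. It is not the operator's actual code: the function name `build_map_roles` and the rule that a role mapped by several fragments receives the union of their groups are assumptions made for this example.

```python
def build_map_roles(fragments: dict[str, list[dict]]) -> list[dict]:
    """Merge per-team mapping fragments into one mapRoles list.

    `fragments` maps a fragment name (e.g. "search-and-ride") to its list of
    mappings. Entries are keyed by rolearn, so a role that appears in two
    fragments ends up with the union of both group lists (assumed rule).
    """
    merged: dict[str, dict] = {}
    for name in sorted(fragments):  # sorted for a deterministic result
        for mapping in fragments[name]:
            entry = merged.setdefault(
                mapping["rolearn"],
                {
                    "rolearn": mapping["rolearn"],
                    "username": mapping["username"],
                    "groups": [],
                },
            )
            for group in mapping["groups"]:
                if group not in entry["groups"]:
                    entry["groups"].append(group)
    return list(merged.values())
```

Because the merged list is always derived from the fragments, deleting a team's fragment removes its entries on the next rebuild, without anyone editing the central ConfigMap by hand.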

A module for integration in Terraform is structured in the following way:

    # Custom Module to apply CRD
    module "aws-auth" {
      source                 = "terraform.tier-services.io/tier/aws-auth/kubernetes"
      version                = "~> 1.0"
      clustername            = module.kubernetes-data.eks-cluster-id
      region                 = "eu-central-1"
      cluster_endpoint       = module.kubernetes-data.eks-cluster-endpoint
      cluster_ca_certificate = module.kubernetes-data.eks-ca-data-base64
      team                   = local.metadata.team
      map_roles = [
        {
          rolearn  = aws_iam_role.operator_role.arn
          username = local.auth.operator_role_name
          groups   = ["${local.metadata.team}-viewers", "${local.metadata.team}-editors"]
        },
        {
          rolearn  = aws_iam_role.spectator_role.arn
          username = local.auth.spectator_role_name
          groups   = ["${local.metadata.team}-viewers"]
        },
      ]
    }

To actually grant access to Kubernetes resources, we need a Role or ClusterRole that has the required permissions, and a RoleBinding or ClusterRoleBinding that assigns those permissions to the mapped user or group:

    # Cluster Role Binding to map group to actual ClusterRole/Role
    resource "kubernetes_cluster_role_binding" "viewers" {
      metadata {
        name = "search-and-ride-viewers"
      }
      role_ref {
        api_group = "rbac.authorization.k8s.io"
        kind      = "ClusterRole"
        name      = "view"
      }
      subject {
        kind      = "Group"
        name      = "search-and-ride-viewers"
        api_group = "rbac.authorization.k8s.io"
      }
    }
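
The full chain from an AWS role to Kubernetes permissions has two hops: the mapping assigns groups to the role, and the bindings attach a ClusterRole to each group. The following Python sketch models that lookup with plain dictionaries. The function name `cluster_roles_for` and the simplified binding shape (`group`, `cluster_role`) are hypothetical and only serve this example; Kubernetes itself evaluates RBAC inside the API server.

```python
def cluster_roles_for(
    rolearn: str, map_roles: list[dict], bindings: list[dict]
) -> set[str]:
    """Follow the chain AWS role -> Kubernetes groups -> bound ClusterRoles."""
    # Hop 1: collect every group the AWS role is mapped to.
    groups: set[str] = set()
    for mapping in map_roles:
        if mapping["rolearn"] == rolearn:
            groups.update(mapping["groups"])

    # Hop 2: collect the ClusterRoles bound to any of those groups.
    return {b["cluster_role"] for b in bindings if b["group"] in groups}
```

With the search-and-ride mapping and the binding above, the operator role would resolve to the `view` ClusterRole through the `search-and-ride-viewers` group. A group without a binding, such as `search-and-ride-editors` in this sketch, grants nothing until a binding for it exists.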

A possible audit log:

    [2020-10-13 23:00:26,530] Kopf.objects [INFO] [kube-system/aws-auth] Change to aws-auth configmap:
    [("add",
      "mapRoles",
      [
        (30,
         {
           "username":"example-operator",
           "groups":[
             "example-team-infra-viewers",
             "example-team-infra-editors"
           ],
           "rolearn":"arn:aws:iam::0123456789:role/example-operator"
         }),
        (31,
         {
           "username":"example-spectator",
           "groups":[
             "example-team-infra-viewers"
           ],
           "rolearn":"arn:aws:iam::0123456789:role/example-spectator"
         })
      ])
    ]
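
The log entry above has the shape `(action, key, [(index, entry), ...])`. As a rough illustration of how such an entry could be derived by comparing the old and the new list of role mappings, here is a standard-library-only Python sketch. The function name `diff_map_roles` is hypothetical, and this is not the operator's implementation; it only reproduces the shape of the output for added and removed entries.

```python
def diff_map_roles(old: list[dict], new: list[dict]) -> list[tuple]:
    """Describe how `new` differs from `old` as (action, key, items) tuples.

    Each item is an (index, entry) pair, where index is the entry's position
    in the list it was found in (`new` for additions, `old` for removals).
    """
    added = [(i, entry) for i, entry in enumerate(new) if entry not in old]
    removed = [(i, entry) for i, entry in enumerate(old) if entry not in new]

    changes = []
    if added:
        changes.append(("add", "mapRoles", added))
    if removed:
        changes.append(("remove", "mapRoles", removed))
    return changes
```

Logging the result of such a comparison on every rebuild is enough to answer "who gained or lost access, and when" from the operator's stdout alone.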

Conclusion

The aws-auth-operator gives us a flexible setup for our ever-changing teams, their AWS roles, and their representation in Kubernetes. We love it this way!

Check out the TierMobility/aws-auth-operator repo on GitHub, contribute, give feedback, and give it a star ⭐